The NCBI SRA SDK generates loading and dumping tools with their respective libraries for building new runs and accessing existing ones.

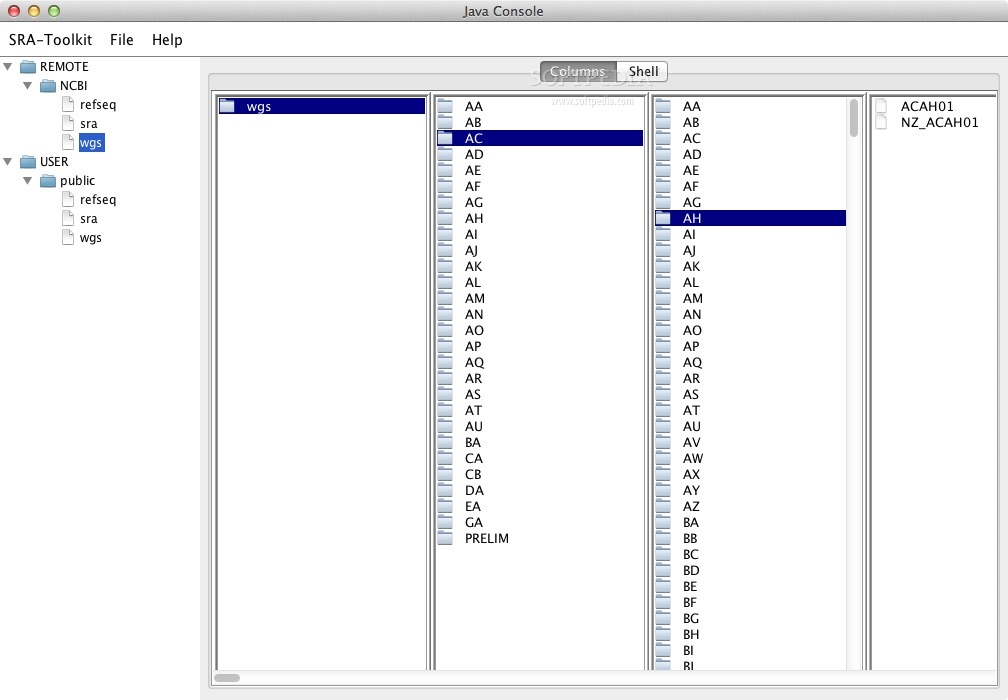

The SRA Toolkit and SDK from NCBI is a collection of tools and libraries for using data in the INSDC Sequence Read Archives.

Configuring the Toolkit. If you are using SRA Toolkit version 2.4 or higher, you should run the configuration tool, `vdb-config`, located in the `bin` subdirectory of the Toolkit package. Go to that subdirectory and run `./vdb-config -i`. This tool sets up the download/cache area for downloaded files.

- Command line help: Type the command followed by '-h'

- Module Name: sratoolkit (see the modules page for more information)

- fastq-dump is being deprecated. Use fasterq-dump instead -- it is much faster and more efficient.

- fasterq-dump uses temporary space while downloading, so you must make sure you have enough free disk space for both the temporary files and the output.

- Do not run more than the default 6 threads on Helix.

- To run trimgalore/cutadapt/trinity on these files, the quality header needs to be changed, e.g.

- fasterq-dump requires temporary space during the download; this temporary directory will use approximately the size of the final output file. On Biowulf, the sratoolkit module is set up to use local disk as the temporary directory. Therefore, if you run the SRA Toolkit on Biowulf, you must allocate local disk as in the examples below.

SRA data currently reside in three NIH repositories:

- NCBI - Bethesda and Sterling

- Amazon Web Services (= 'Amazon cloud' = AWS)

- Google Cloud Platform (GCP)

Two versions of the data exist: the original (raw) submission, and a normalized (extract, transform, load [ETL]) version. NCBI maintains only ETL data online, while AWS and GCP have both ETL and original submission formats. Users who want access to the original BAM files can currently get them only from AWS or GCP.

For ETL data, SRA Toolkit tools on Biowulf always pull from NCBI, because it is nearer and there are no fees. Most SRA Toolkit tools, such as fasterq-dump, pull ETL data from NCBI; prefetch is the only SRA Toolkit tool that provides access to the original BAM files. Files in their original submission format (BAM, CRAM, or some other format) can ONLY be obtained from AWS or GCP, and require that the user provide a cloud-billing account to pay for egress charges. The user needs to establish account information, register it with the toolkit, and authorize the toolkit to pass this information to AWS or GCP. See https://github.com/ncbi/sra-tools/wiki/03.-Quick-Toolkit-Configuration and https://github.com/ncbi/sra-tools/wiki/04.-Cloud-Credentials.

If you attempt to download non-ETL SRA data from AWS or GCP without the account information, you will see an error message along these lines:

fasterq-dump takes significantly more space than the old fastq-dump, because it requires temporary space in addition to the final output. As a rule of thumb, the fasterq-dump guide suggests getting the size of the accession using vdb-dump, then estimating 4x that size for the output and another 4x for the temporary files. For example, if vdb-dump reports a size of 650 MB, you should have 650 MB * 4 = 2600 MB = 2.6 GB for the output file, and another 2.6 GB for the temporary space. It is also recommended that the output file and temporary files be on different filesystems, as in the examples below.
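The rule of thumb above is simple arithmetic. The sketch below encodes it so you can size a job before submitting. It assumes you have already read the accession size (in MB) from vdb-dump. It also rounds the temporary space up to whole GB, for use in a `--gres=lscratch:N` request. The function names and the decimal MB-to-GB conversion are illustrative choices and are not part of the toolkit.

```python
import math
from typing import Tuple

# Multiplier from the fasterq-dump rule of thumb: ~4x the accession size
# for the output and another ~4x for the temporary files.
SPACE_FACTOR = 4


def estimate_space_mb(accession_size_mb: float) -> Tuple[float, float]:
    """Return (output_mb, temp_mb) estimated from the accession size."""
    if accession_size_mb < 0:
        raise ValueError("accession size must be non-negative")
    output_mb = accession_size_mb * SPACE_FACTOR
    temp_mb = accession_size_mb * SPACE_FACTOR
    return output_mb, temp_mb


def lscratch_gb(temp_mb: float) -> int:
    """Whole GB of local scratch to request (1 GB = 1000 MB, rounded up, min 1)."""
    return max(1, math.ceil(temp_mb / 1000))


if __name__ == "__main__":
    out_mb, tmp_mb = estimate_space_mb(650)
    print(out_mb, tmp_mb, lscratch_gb(tmp_mb))  # 2600 2600 3
```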

You can download SRA FASTQ files using the fasterq-dump tool, which writes the FASTQ files to your current working directory by default. (Note: the old fastq-dump is being deprecated.) During the download, a temporary directory is created in the location specified by the -t flag (in the example below, /scratch/$USER). This directory is deleted after the download completes.

For example, on Helix, the interactive data transfer system, you can download as in the example below. To download on Biowulf, don't run on the Biowulf login node; use a batch job or interactive job instead.
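The helper below is a sketch of the Helix download. It assembles the fasterq-dump argument list: the temporary directory goes in via `-t`, an optional output directory via `-O`, and the thread count via `-e`. The thread count is capped at the default of 6, per the note about Helix. The helper assumes the sratoolkit module is loaded so that fasterq-dump is on `PATH`. The helper names and the user name `alice` are hypothetical.

```python
import shlex
import subprocess
from typing import List, Optional

MAX_HELIX_THREADS = 6  # fasterq-dump's default; do not exceed it on Helix


def fasterq_dump_argv(accession: str, temp_dir: str,
                      out_dir: Optional[str] = None,
                      threads: int = MAX_HELIX_THREADS) -> List[str]:
    """Build a fasterq-dump command line as an argv list (no shell needed)."""
    if not 1 <= threads <= MAX_HELIX_THREADS:
        raise ValueError(f"threads must be between 1 and {MAX_HELIX_THREADS}")
    argv = ["fasterq-dump", "-t", temp_dir, "-e", str(threads)]
    if out_dir is not None:
        argv += ["-O", out_dir]  # otherwise output goes to the current directory
    argv.append(accession)
    return argv


def run_download(accession: str, temp_dir: str) -> None:
    """Run the download; raises CalledProcessError if fasterq-dump fails."""
    subprocess.run(fasterq_dump_argv(accession, temp_dir), check=True)


if __name__ == "__main__":
    argv = fasterq_dump_argv("SRR2048331", "/scratch/alice")
    print(shlex.join(argv))  # fasterq-dump -t /scratch/alice -e 6 SRR2048331
```

Building an argv list instead of a shell string avoids quoting problems. The catch is that `$USER` is not expanded, so pass a concrete path.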

1. Create a script file similar to the one below.

2. Submit the script on Biowulf. Note: this job allocates 30 GB of local disk (--gres=lscratch:30) and then uses the flag -t /lscratch/$SLURM_JOBID to write temporary files to local disk. If you do not allocate local disk and direct temporary files to it with -t, the temporary files will be written to the current working directory. Using local disk is more efficient both for your job and for the system as a whole, as the timings below show:

| Command | TMPDIR | Output directory | Time |
|---|---|---|---|
| `time fasterq-dump -t /lscratch/$SLURM_JOBID SRR2048331` | local disk on Biowulf node | /data/$USER | 49 seconds |
| `time fasterq-dump SRR2048331` | /data/$USER | /data/$USER | 68 seconds |
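Steps 1 and 2 above can be sketched as follows. The batch script text is generated from Python only so that it can be checked. It requests local disk with an `#SBATCH --gres=lscratch:N` directive, which is equivalent to passing `--gres` to sbatch on the command line. It then points `-t` at `/lscratch/$SLURM_JOBID`. The output directory default, the `set -e` choice, and the file name `fasterq.sh` are assumptions made for illustration.

```python
def biowulf_fasterq_script(accession: str, out_dir: str = "/data/$USER",
                           lscratch_gb: int = 30) -> str:
    """Return a bash batch script that downloads one accession on Biowulf.

    $USER and $SLURM_JOBID are left unexpanded on purpose: bash expands
    them when the job runs on the compute node.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --gres=lscratch:{lscratch_gb}",  # allocate local disk (GB)
        "set -e",  # stop at the first failing command
        "module load sratoolkit",
        f"cd {out_dir}",  # fasterq-dump writes output to the cwd by default
        f"fasterq-dump -t /lscratch/$SLURM_JOBID {accession}",  # temp on local disk
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Save the output as fasterq.sh, then submit it with:  sbatch fasterq.sh
    print(biowulf_fasterq_script("SRR2048331"), end="")
```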

NOTE: The SRA Toolkit executables use random access to read input files. Because of this, users with data located on GPFS filesystems will see significant slowdowns in their jobs. For SRA data (including dbGaP data), it is best to first copy the input files to a local /lscratch/$SLURM_JOBID directory, work on the data in that directory, and copy the results back at the end of the job, as in the example below. See the section on using local disk in the Biowulf User Guide.

Using the 'swarm' utility, one can submit many jobs to the cluster to run concurrently.

Set up a swarm command file (e.g. /data/username/cmdfile). Here is a sample file that downloads SRA data using fasterq-dump:
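A swarm command file is plain text with one command line per subjob. The helper below is a sketch that produces one fasterq-dump line per accession. Each line uses the subjob's own local disk for temporary files and writes output to a directory under /data. The output directory is a hypothetical default, and the accession list should be replaced with your own. Remember to request the local disk with `--gres=lscratch:N` when you submit the swarm.

```python
from typing import Iterable, List


def download_swarm_lines(accessions: Iterable[str],
                         out_dir: str = "/data/$USER/fastq") -> List[str]:
    """One fasterq-dump command per accession, for a swarm command file.

    $SLURM_JOBID is expanded separately in each swarm subjob, so every
    subjob gets its own local-disk temporary directory.
    """
    return [
        f"fasterq-dump -t /lscratch/$SLURM_JOBID -O {out_dir} {acc}"
        for acc in accessions
    ]


if __name__ == "__main__":
    # Redirect into the command file, e.g.:  python make_cmdfile.py > cmdfile
    for line in download_swarm_lines(["SRR2048331"]):
        print(line)
```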

If you have previously downloaded SRA data into your own directory, you can copy those files to local scratch on the node, process them there, then copy the output back to your /data area. Sample swarm command file:
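Each swarm line can copy the input to the subjob's local disk, convert it there, and copy the results back. The sketch below builds such lines. It assumes a hypothetical layout with inputs at `/data/$USER/sra/<accession>.sra` and results in `/data/$USER/fastq`. It also assumes that fasterq-dump accepts a path to a local `.sra` file. Adjust both directories to match your own.

```python
from typing import Iterable, List


def staging_swarm_lines(accessions: Iterable[str],
                        sra_dir: str = "/data/$USER/sra",
                        out_dir: str = "/data/$USER/fastq") -> List[str]:
    """One swarm line per accession: copy in, convert on lscratch, copy back."""
    lines = []
    for acc in accessions:
        steps = [
            "cd /lscratch/$SLURM_JOBID",
            f"cp {sra_dir}/{acc}.sra .",  # stage the input onto local disk
            f"fasterq-dump -t /lscratch/$SLURM_JOBID {acc}.sra",  # random-access reads stay local
            f"cp {acc}*.fastq {out_dir}/",  # copy the FASTQ results back
        ]
        # '&&' stops this subjob at the first failing step.
        lines.append(" && ".join(steps))
    return lines


if __name__ == "__main__":
    for line in staging_swarm_lines(["SRR2048331"]):
        print(line)
```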

The --gres=lscratch:N option must be included when submitting the swarm to allocate local disk on the node. For example, to allocate 100 GB of scratch space and 4 GB of memory:
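That submission (100 GB of local disk and 4 GB of memory per subjob) can be sketched as an argument list. It uses Biowulf swarm's `-f` (command file), `-g` (GB of memory per process), `--gres`, and `--module` options. The command-file path follows the example above, and the helper itself is only illustrative.

```python
import shlex
from typing import List


def swarm_argv(cmdfile: str, mem_gb: int = 4, lscratch_gb: int = 100,
               module: str = "sratoolkit") -> List[str]:
    """Build a swarm submission that allocates local disk for every subjob."""
    return [
        "swarm",
        "-f", cmdfile,                     # one command line per subjob
        "-g", str(mem_gb),                 # memory per subjob, in GB
        f"--gres=lscratch:{lscratch_gb}",  # local scratch per subjob, in GB
        "--module", module,                # module loaded in every subjob
    ]


if __name__ == "__main__":
    print(shlex.join(swarm_argv("/data/username/cmdfile")))
    # swarm -f /data/username/cmdfile -g 4 --gres=lscratch:100 --module sratoolkit
```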

For more information regarding running swarm, see swarm.html

Allocate an interactive session and run the interactive job there.

NCBI's database of Genotypes and Phenotypes (dbGaP) was developed to archive and distribute the data and results from studies that have investigated the interaction of genotype and phenotype in humans. Most dbGaP data is controlled-access. See the documentation for downloading dbGaP data.

If you are having problems with dbGaP downloads, please try this test download. It accesses a copy of public 1000 Genomes data at NCBI. This is to confirm whether it is a general problem, or specific to your configuration, or specific to the accessions you are trying to download.

Changes with SRAToolkit v2.10.*: It is no longer necessary to be in a specified repository workspace to run the download, and the ngc file is provided on the command line.

On a Biowulf node:

You should see two files called SRR1219902_dbGaP-0.sra and SRR1219902_dbGaP-0.sra.vdbcache appear in /data/$USER/test-dbgap/
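With v2.10.* the dbGaP test download comes down to a single prefetch call, with the `.ngc` repository key given via `--ngc`. The sketch below runs prefetch from the target directory. It assumes that, with the default Biowulf configuration, the files land in that directory, as they appear to in the test above. The `.ngc` file name and user name are hypothetical: use the key file you downloaded from dbGaP. The sketch also assumes the sratoolkit module is loaded.

```python
import subprocess
from pathlib import Path
from typing import List


def dbgap_prefetch_argv(accession: str, ngc_file: str) -> List[str]:
    """prefetch command for a controlled-access run, authorized by an .ngc key."""
    return ["prefetch", "--ngc", ngc_file, accession]


def run_dbgap_prefetch(accession: str, ngc_file: str, workdir: Path) -> None:
    """Run prefetch inside workdir; raises CalledProcessError on failure."""
    workdir.mkdir(parents=True, exist_ok=True)
    # Resolve the key path first so a relative path still works after cwd changes.
    key = str(Path(ngc_file).resolve())
    subprocess.run(dbgap_prefetch_argv(accession, key), cwd=workdir, check=True)


# Example (hypothetical key file and user name):
# run_dbgap_prefetch("SRR1219902", "/data/alice/prj_12345.ngc",
#                    Path("/data/alice/test-dbgap"))
```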

As of v2.10.4, the SRA Toolkit contains the following executables:

By default, the SRA Toolkit installed on Biowulf is set up to use the central Biowulf configuration file, which does NOT maintain a local cache of SRA data. After discussion with NCBI SRA developers, it was decided that this was the most appropriate setup for most users on Biowulf. The hisat program can automatically download SRA data as needed.

In some cases, users may want to download SRA data and retain a copy. To download using NCBI's prefetch tool, you need to set up your own configuration file for the NCBI SRA Toolkit. Use the vdb-config command to set up a directory for downloading. In the following example, vdb-config is used to set up /data/$USER/sra-data as the local repository for downloading SRA data. Remember that /home/$USER is limited to a quota of 16 GB, so it is best to direct your downloaded SRA data to /data/$USER.

Sample session: user input in bold.

For more information about encrypted data, please see the Protected Data Usage Guide at NCBI.

If you are getting errors while using fastq-dump or fasterq-dump, try moving your existing SRA Toolkit configuration out of the way. This tests whether some setting in your configuration is causing the problem.
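Here is a sketch of that step. It assumes the per-user configuration lives in `~/.ncbi`, where vdb-config normally writes `user-settings.mkfg`; the `.bak` backup name is an arbitrary choice. Rename the directory back afterwards if your own settings turn out not to be the cause.

```python
from pathlib import Path
from typing import Optional


def move_config_aside(home: Optional[Path] = None,
                      suffix: str = ".bak") -> Optional[Path]:
    """Rename ~/.ncbi to ~/.ncbi<suffix> and return the backup path.

    Returns None if there is no ~/.ncbi to move; refuses to overwrite an
    existing backup.
    """
    home = home if home is not None else Path.home()
    config_dir = home / ".ncbi"
    if not config_dir.exists():
        return None
    backup = home / (".ncbi" + suffix)
    if backup.exists():
        raise FileExistsError(f"{backup} already exists; remove or rename it first")
    config_dir.rename(backup)
    return backup


def restore_config(home: Optional[Path] = None, suffix: str = ".bak") -> None:
    """Undo move_config_aside().

    Fails if a new, non-empty ~/.ncbi was created in the meantime.
    """
    home = home if home is not None else Path.home()
    (home / (".ncbi" + suffix)).rename(home / ".ncbi")
```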